Performance

Optimizing your merge queue for maximum efficiency.

As development teams scale and the volume of pull requests grows, achieving an optimal balance between merge speed, reliability, and resource usage becomes a paramount concern. Performance bottlenecks in the merge process can significantly hamper a team’s productivity. Leveraging the capabilities of Mergify’s merge queue configurations can help alleviate these challenges. This guide dives deep into how you can tune your merge queue to strike the right balance, ensuring a fast, cost-efficient, and reliable merging process tailored to your team’s needs.

The Trade-offs: Reliability, Cheapness, and Velocity (RCV Theorem)

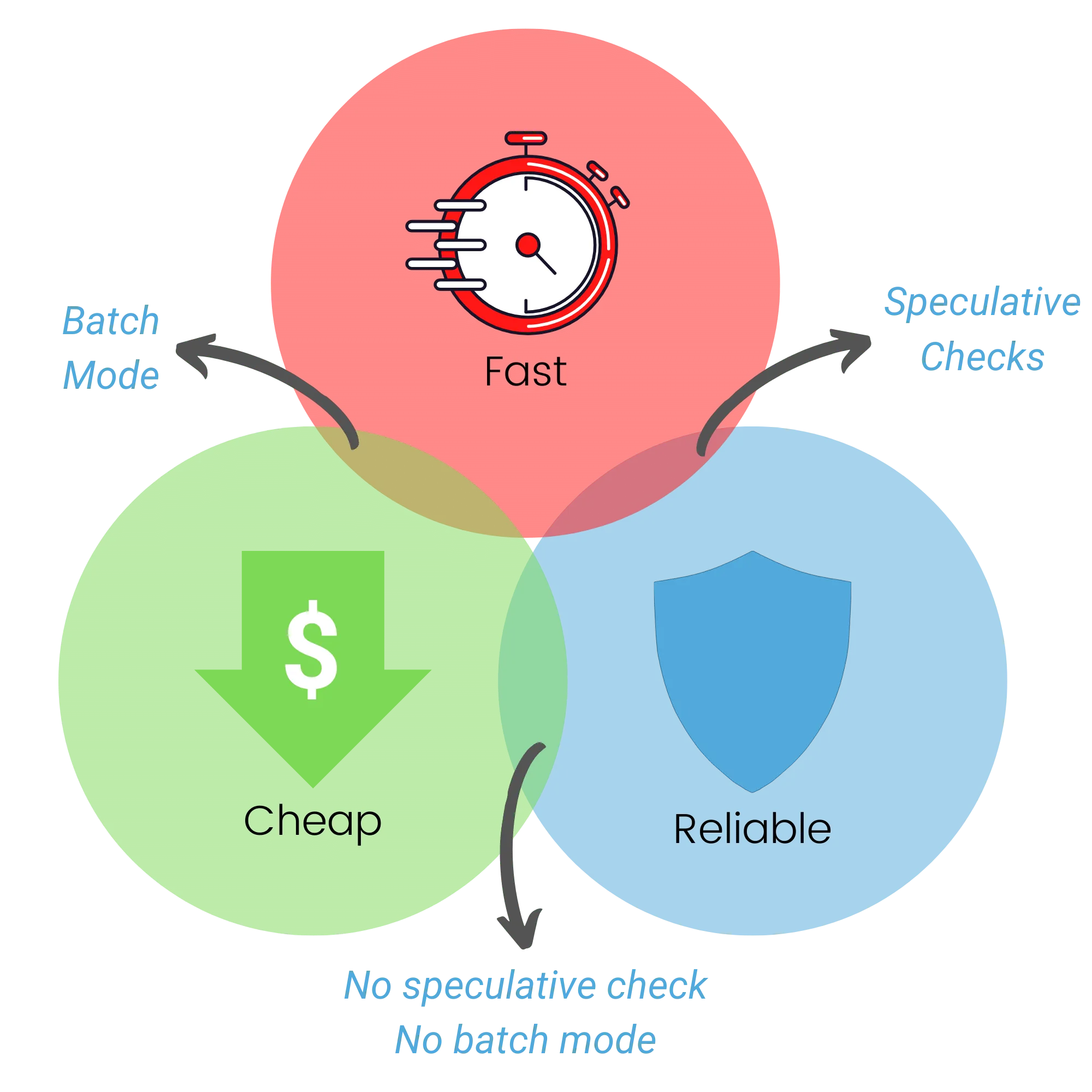

Before configuring your merge queue, you need to understand the trade-off you are making. Three critical properties determine how effective a merge queue is:

- Reliability: Ensure merges are accurate and won’t cause issues.

- Cheapness: Minimize the number of CI jobs executed.

- Velocity: Increase throughput and decrease latency.

Similar to the CAP theorem for data stores, you can only optimize two of these properties simultaneously. This is what we term the RCV Theorem.

Based on this trade-off, there are 3 scenarios that you can choose from:

- Reliable and Cheap: This is the standard behavior, where each pull request is validated sequentially, ensuring reliability at a minimal CI cost. As every pull request is tested one after the other, there is no room for wasted CI time. However, this scenario is slow as only one PR is tested at a time.

- Reliable and Fast: If you aim to reduce latency and maximize throughput without considering CI costs, enable speculative checks. This feature lets Mergify test pull requests in parallel, predicting potential merges and executing CI runs simultaneously. Speculative checks can slightly increase CI cost: if a pull request ahead in the queue fails, the scenarios that were tested on top of it produce results that won’t be used.

- Cheap and Fast: By using batch mode, you can merge groups of pull requests simultaneously. This reduces CI runs but merges pull requests that are not tested individually. There is therefore a theoretical risk that a hidden failure is merged even though the whole batch passes the CI.

You can also combine speculative checks and batching to achieve a balanced approach between reliability, cheapness, and velocity. This configuration offers a mix of both strategies, allowing you to test multiple batches of requests concurrently.
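The three scenarios, and their combination, can be compared with a rough best-case model. The sketch below is illustrative only and is not part of Mergify: the `estimate` function and its parameter names are hypothetical, and it assumes every CI run passes, so real throughput will be lower and real CI cost higher.

```python
from dataclasses import dataclass


@dataclass
class QueueEstimate:
    prs_per_hour: float    # best-case number of PRs merged per hour
    ci_runs_per_pr: float  # best-case number of CI runs spent per merged PR


def estimate(ci_minutes: float, speculative_checks: int = 1, batch_size: int = 1) -> QueueEstimate:
    """Best-case estimate, assuming every CI run passes (hypothetical model)."""
    cycles_per_hour = 60 / ci_minutes
    # Each CI cycle validates `speculative_checks` runs in parallel,
    # and each run covers `batch_size` pull requests.
    prs_per_hour = cycles_per_hour * speculative_checks * batch_size
    # One CI run is shared by every PR in the batch.
    ci_runs_per_pr = 1 / batch_size
    return QueueEstimate(prs_per_hour, ci_runs_per_pr)


if __name__ == "__main__":
    for label, kwargs in [
        ("reliable and cheap", {}),
        ("reliable and fast", {"speculative_checks": 3}),
        ("cheap and fast", {"batch_size": 3}),
        ("combined", {"speculative_checks": 2, "batch_size": 3}),
    ]:
        print(label, estimate(20, **kwargs))
```

In this model, speculative checks raise throughput without lowering the CI runs per PR, while batching raises throughput and lowers CI runs per PR at the cost of testing pull requests together.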

Determining the Right Configuration for Speculative Checks and Batching

Optimizing the performance of your merge queue involves fine-tuning the number of speculative checks and the size of batches. The right configuration balances throughput, latency, and CI resource consumption. Here’s a guide to help you determine the optimal settings:

- Expected Merge Throughput: Analyze your historical data to gauge the average number of merges per hour or per day. This will help set a benchmark for speculative checks and batch size, ensuring that pull requests are processed at the desired rate.

- Queue Latency: Consider the typical wait time in the queue for a PR. Aim for settings that reduce this latency, but be mindful of the trade-offs. Reducing latency might lead to increased CI consumption or decreased reliability.

- Peak Load Periods: Observe patterns to identify times when there’s a surge in PR merges, such as during active developer hours. Adjust your settings to handle these peak periods efficiently, ensuring that the merge queue remains effective during high activity periods.

- CI Resource Availability: Evaluate the resources allocated to your CI environment. If resources are abundant, you can lean towards higher speculative checks. Conversely, if resources are limited, consider a conservative approach to ensure that CI doesn’t become a bottleneck.

- CI Job Duration: The execution time of CI jobs can significantly influence your choice. Faster CI jobs might permit a higher number of speculative checks, as potential reruns won’t lead to major delays. On the other hand, longer CI jobs necessitate a more conservative setting.

- Stability of Changes: Reflect on the typical quality of pull requests in your repository. For repositories with a high rate of stable PRs, you might increase speculative checks or batch sizes. However, for those with frequent unstable PRs, a conservative approach might be more suitable.

- Team Size & Activity Patterns: The size of your team and their activity patterns can also dictate your settings. Larger or globally distributed teams might have pull requests coming in throughout the day. Understanding these patterns can help in configuring the merge queue for optimal performance.

- Feedback Loop for Developers: Ensure that the chosen configuration promotes a quick feedback loop. While speculative checks and batching can enhance queue performance, they shouldn’t delay critical feedback to developers about the state of their PR.

By carefully considering and balancing these factors, you can configure your merge queue to be both efficient and reliable. Remember, the right balance may vary over time as your team grows and your development processes evolve. Periodic reviews and adjustments can help maintain an optimal merge queue performance.
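To see why the stability of changes matters for batch size and speculative checks, here is a minimal sketch. It assumes each pull request passes CI independently with the same probability, which is a simplification; the function names are hypothetical and not part of Mergify.

```python
def batch_pass_probability(success_ratio: float, batch_size: int) -> float:
    """Probability that a whole batch passes, assuming independent PR outcomes."""
    return success_ratio ** batch_size


def expected_discarded_checks(success_ratio: float, speculative_checks: int) -> float:
    """Expected number of speculative checks whose result is thrown away.

    The check at position i (0-based) is built on top of the i PRs ahead of it,
    so its result is unusable if any of those PRs fails.
    """
    return sum(1 - success_ratio ** ahead for ahead in range(speculative_checks))


if __name__ == "__main__":
    print(batch_pass_probability(0.9, 5))
    print(expected_discarded_checks(0.9, 3))
```

Under these assumptions, with a 90% success ratio a batch of 5 pull requests passes only about 59% of the time, which is why repositories with frequent unstable PRs tend to benefit from smaller batches and fewer speculative checks.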

Performance Configuration Calculator

Optimizing your merge queue is a balancing act between throughput and CI resource allocation. Our calculator is here to guide you in configuring the optimal settings tailored to your team’s workflow. Here’s how to use it:

- CI time in minutes: Input the average time it takes for your Continuous Integration to validate a change.

- Estimated CI success ratio in %: Provide an estimate of how often your CI process returns a successful result. For instance, if your CI passes 90 out of 100 times on average, you’d input 90%.

- Desired PRs to merge per hour: Set the minimum number of pull requests you’d like to merge per hour.

- Desired CI usage in %: Define how intensively you’d like to utilize your CI resources. A setting of 100% indicates standard usage, matching a regular merge queue. Values below 100% will aim to conserve CI resources by leveraging batching, while values above 100% will prioritize higher throughput and reduced latency, even if it means using more CI time than usual.

Once you’ve input your parameters, the calculator will suggest the optimal configuration for your merge queue, ensuring an efficient and seamless merging process.
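To give a feel for how these four inputs could interact, here is a rough sketch of one possible heuristic. It is not the formula used by the calculator, which is not documented here; the function name `suggest_settings`, the returned keys, and the way the success ratio is applied are all assumptions made for illustration.

```python
import math


def suggest_settings(
    ci_minutes: float,
    success_ratio_pct: float,
    prs_per_hour: float,
    ci_usage_pct: float,
) -> dict:
    """Hypothetical heuristic, not Mergify's actual calculator."""
    cycles_per_hour = 60 / ci_minutes
    # Assumption: only `success_ratio_pct` of tested PRs end up merged,
    # so more PRs must be in flight per CI cycle than the raw target.
    prs_per_cycle = math.ceil(prs_per_hour / cycles_per_hour / (success_ratio_pct / 100))
    # Assumption: below 100% usage, batch so that each CI run covers
    # several PRs; at or above 100%, this sketch does not batch at all.
    batch_size = math.ceil(100 / ci_usage_pct) if ci_usage_pct < 100 else 1
    # Remaining capacity comes from running checks in parallel.
    speculative_checks = max(1, math.ceil(prs_per_cycle / batch_size))
    return {"speculative_checks": speculative_checks, "batch_size": batch_size}


if __name__ == "__main__":
    # 20-minute CI, 90% success, 10 PRs/hour wanted, half the usual CI usage.
    print(suggest_settings(20, 90, 10, 50))
```

Treat the output as a starting point for experimentation rather than a recommendation; the values suggested by the calculator itself should take precedence.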

Optimizing Merge Queue Time with Efficient CI Runs

To ensure your merge queue processes efficiently, it’s essential that your Continuous Integration (CI) system runs as quickly as possible. One way to achieve this is by meticulously selecting the tests you run, ensuring that only necessary tests are executed. Remember, every minute saved in CI time can have a cascading positive effect on your overall merge efficiency.

A strategic approach to further optimize CI runtime is the Two-Step CI method. This approach differentiates between:

- Preliminary Tests: These are the tests run immediately when a PR is created or updated. They’re designed to be quick yet effective, ensuring only quality PRs enter the merge queue.

- Comprehensive Tests: These tests are more exhaustive and are run just before merging, ensuring the final quality of the code.

By splitting your tests in this manner, you ensure that the merge queue is not held up by lengthy CI processes for every minor PR update. Instead, the more extensive tests are reserved for when PRs are about to be merged, providing a balance between speed and code quality.
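The effect of the split can be illustrated with simple arithmetic. The sketch below is a hypothetical model, not a Mergify feature: it assumes that without the split the full suite runs on every PR update and once more in the queue, and that with the split only the comprehensive tests run in the queue.

```python
def single_step_ci_minutes(updates: int, preliminary: float, comprehensive: float) -> float:
    """CI minutes per PR when the full suite runs on every update and in the queue."""
    full_suite = preliminary + comprehensive
    return (updates + 1) * full_suite


def two_step_ci_minutes(updates: int, preliminary: float, comprehensive: float) -> float:
    """CI minutes per PR when updates run preliminary tests only,
    and the comprehensive tests run once, just before merging."""
    return updates * preliminary + comprehensive


if __name__ == "__main__":
    # A PR updated 4 times, with 5-minute preliminary and 25-minute comprehensive tests.
    print(single_step_ci_minutes(4, 5, 25))
    print(two_step_ci_minutes(4, 5, 25))
```

With these example numbers, developers also get feedback on each update after 5 minutes instead of 30, which is the main benefit of the split for the feedback loop.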

Concluding Thoughts

Mergify provides a range of configurations to tailor your CI budget and merge queue strategy. Whether you’re aiming for speed, cost-efficiency, or reliability, our platform caters to diverse requirements. With Mergify, the merging process becomes easier, faster, and safer, boosting your team’s performance.